May, 5th - A new drawing is available, called “Panegyric”

2024

"Panegyric"

VM Storage with XFS for High I/O Operations

April, 9th - The efficiency of virtual machines (VMs) is often constrained by the underlying storage system’s ability to handle high volumes of read and write operations. For Fedora users setting up a VM that will experience intense I/O activity, formatting a disk with the XFS filesystem is a more than valid option. Let’s look at the process of preparing a disk with XFS on Fedora, tailored for VM storage that anticipates a high number of reads and writes.

XFS

XFS, known for its high performance and scalability, is a 64-bit journaling filesystem. Originally developed by Silicon Graphics, Inc. (SGI) in 1993, it was engineered to support very large filesystems and to perform well in environments that manage large files and need to scale, both of which are characteristics of VM storage.

XFS for VM Storage with High I/O Demand

1. Good I/O Throughput:

XFS’s architecture is optimised for high throughput, making it suitable for handling numerous simultaneous read and write operations. This is very important for VM environments where disk I/O operations are constant and performance is a known bottleneck.

2. Dynamic Scalability:

The ability to efficiently manage large files and filesystems ensures that VM storage can be scaled dynamically in response to the needs of the system, without compromising on performance.

3. Robust Journaling:

The journaling mechanism of XFS records metadata changes, so the filesystem structure stays consistent after unexpected shutdowns or system crashes. This protects against filesystem corruption during high I/O periods.

4. Advanced Management Features:

XFS includes features such as allocation groups for enhanced disk access, online defragmentation to maintain optimal performance, and online resizing to adjust resources dynamically — all of which are beneficial under heavy I/O load.

Preparing a Disk with XFS on Fedora

Before jumping in on formatting, make sure you back up any existing data on the disk to avoid loss. The following steps will guide you through preparing a disk with XFS for your VM on Fedora.

Step 1: Install XFS Utilities

Begin by installing the XFS utilities on Fedora (if you don’t have them already):

sudo dnf install xfsprogs

Step 2: Choose the Target Disk

Use the lsblk command to list all disks and identify the one intended for formatting:

lsblk

Look for the disk through its size or identifier, like /dev/sdX.
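If you prefer to pick the disk programmatically, lsblk can emit JSON with lsblk --json. Below is a minimal sketch, assuming only the name, size and type fields of that output. The sample data and the whole_disks helper are made up for illustration.

```python
import json

# Illustrative sample shaped like `lsblk --json` output (real output has more fields).
SAMPLE = """
{"blockdevices": [
  {"name": "sda", "size": "500G", "type": "disk",
   "children": [{"name": "sda1", "size": "500G", "type": "part"}]},
  {"name": "sdb", "size": "1T", "type": "disk"}
]}
"""


def whole_disks(lsblk_json: str) -> list[tuple[str, str, bool]]:
    """Return (device path, size, has_partitions) for each top-level disk."""
    devices = json.loads(lsblk_json)["blockdevices"]
    return [
        (f"/dev/{dev['name']}", dev["size"], bool(dev.get("children")))
        for dev in devices
        if dev["type"] == "disk"
    ]


if __name__ == "__main__":
    for path, size, partitioned in whole_disks(SAMPLE):
        print(path, size, "has partitions" if partitioned else "no partitions")
```

A disk that already has partitions is more likely to hold data, so it deserves a second look before formatting.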

Step 3: Format the Disk

After locating your disk, format it to XFS:

sudo mkfs.xfs /dev/sdX

Replace /dev/sdX with the actual disk path.

Step 4: Mount the Formatted Disk

Create a mount point and mount the formatted disk:

sudo mkdir /mnt/vm_storage

sudo mount /dev/sdX /mnt/vm_storage

Step 5: Ensure Automatic Mount at Boot

To mount the disk automatically upon boot, modify the /etc/fstab file:

- Identify your disk’s UUID with blkid.

- Append the following line to /etc/fstab, substituting your-disk-uuid with the actual UUID:

UUID=your-disk-uuid /mnt/vm_storage xfs defaults 0 0
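A typo in /etc/fstab can cause trouble at boot, so it can help to generate the line instead of typing it. This is a small sketch of that idea. The fstab_entry function and its checks are my own illustration, not part of any Fedora tooling.

```python
def fstab_entry(uuid: str, mount_point: str, fs_type: str = "xfs",
                options: str = "defaults", dump: int = 0, fsck_pass: int = 0) -> str:
    """Build one six-field /etc/fstab line for a filesystem identified by UUID."""
    if not uuid or any(ch.isspace() for ch in uuid):
        raise ValueError("UUID must be non-empty and contain no whitespace")
    if not mount_point.startswith("/"):
        raise ValueError("mount point must be an absolute path")
    return f"UUID={uuid} {mount_point} {fs_type} {options} {dump} {fsck_pass}"


if __name__ == "__main__":
    # "your-disk-uuid" is a placeholder, exactly as in the text above.
    print(fstab_entry("your-disk-uuid", "/mnt/vm_storage"))
```

After editing /etc/fstab, running sudo mount -a is a quick way to check that the new entry mounts cleanly before the next reboot.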

You can also read this in the Linux admin guide.

Syncthing as a service

March, 31st - To make Syncthing start automatically on Fedora, you can use systemd. This approach allows you to start Syncthing automatically at boot time under your user account, ensuring that it’s always running in the background without requiring manual intervention. Here’s how you can do it:

Install Syncthing (if you haven’t already): You can install Syncthing from the default Fedora repositories. Open a terminal and run:

sudo dnf install syncthing

Enable and start Syncthing service for your user: systemd manages services through units. For each user, systemd can manage a separate instance of the Syncthing service. This is done by enabling and starting a “user” service, not a “system” service. This means the service will start automatically when your user logs in and will run under your user’s permissions.

Enable the service: This step tells systemd to start the Syncthing service automatically on user login.

systemctl --user enable syncthing.service

Start the service now: To start the Syncthing service immediately without waiting for the next login or reboot, use the following command:

systemctl --user start syncthing.service

Verify the service is running: You can check that the Syncthing service is active and running with:

systemctl --user status syncthing.service

Accessing Syncthing: By default, Syncthing’s web interface will be available at http://localhost:8384/. You can use your web browser to access it and configure your folders and devices.

Optional - Enable service to start at boot: While the above steps will start Syncthing on user login, if you want the service to start at boot time (before login), you will need to enable lingering for your user. This tells systemd to start user services at boot instead of at login. Run:

sudo loginctl enable-linger $USER

This command makes systemd start user services at boot time. It’s particularly useful if Syncthing needs to run on a headless server or a system where you don’t log in graphically.

Syncthing should now automatically start for your user account on Fedora, either at user login or at boot time (if lingering is enabled).
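On a headless machine there is no browser to confirm the web interface came up. The sketch below checks whether something is listening on the default port 8384 mentioned above. The port_open helper is a hypothetical name, and it only tests the TCP port, not that Syncthing itself is healthy.

```python
import socket


def port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts.
        return False


if __name__ == "__main__":
    state = "listening" if port_open("localhost", 8384) else "not reachable"
    print(f"Syncthing web interface port is {state}")
```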

Restricting SSH connections

March, 29th - Increasing your system’s security by limiting SSH access to a single, specified machine (in this example, within your Local Area Network (LAN)) is always a good strategic approach. This method focuses on configuring firewall rules to ensure only a designated device can connect.

Determine the Permitted Laptop’s IP Address

First, you need to identify the IP address of the machine allowed to SSH into your system. This can typically be found in the network settings, or by executing the ip addr command in a terminal on Fedora (macOS does not ship the ip tool by default, so use ifconfig there).

Suppose the allowed laptop’s IP address is 192.168.1.5.

Configuring the Firewall with firewalld

Fedora uses firewalld for firewall management, allowing you to define precise access control rules:

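Implement a Rich Rule: Add a firewall rule to permit SSH connections from your specified IP address by executing:

sudo firewall-cmd --permanent --zone=public --add-rich-rule='rule family="ipv4" source address="192.168.1.5" service name="ssh" accept'

This command creates a permanent rule in the public zone that accepts SSH connections from the IP address 192.168.1.5. Note that a rich rule only adds an allowance: if the ssh service is also enabled for the zone (check with sudo firewall-cmd --zone=public --list-services), other hosts can still connect until you remove it with sudo firewall-cmd --permanent --zone=public --remove-service=ssh.

Reload the Firewall: To activate your configuration, reload the firewall:

sudo firewall-cmd --reload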

Verify Your Firewall Configuration

Ensure your settings are correctly applied by listing all active rules:

sudo firewall-cmd --list-rich-rules --zone=public

This command will display the rich rules within the public zone, including your SSH connection restriction.

Important Considerations

- It’s assumed that the Fedora system and the laptop are on the same network subnet (192.168.1.0/24). You may need to adjust your setup according to your network’s configuration.

- Ensure the laptop’s IP address is static or reserved in your DHCP server settings to prevent connection issues due to dynamic IP assignment.
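The subnet assumption is easy to check before touching the firewall. Here is a sketch using Python’s standard ipaddress module. It validates the address against the LAN range and builds the same rich rule string used above. The ssh_rich_rule function is a name I made up for illustration.

```python
import ipaddress


def ssh_rich_rule(client_ip: str, lan: str = "192.168.1.0/24") -> str:
    """Return a firewalld rich rule string accepting SSH from client_ip.

    Raises ValueError if client_ip is malformed or outside the given LAN range.
    """
    address = ipaddress.ip_address(client_ip)
    network = ipaddress.ip_network(lan)
    if address not in network:
        raise ValueError(f"{client_ip} is not inside {lan}")
    family = "ipv4" if address.version == 4 else "ipv6"
    return f'rule family="{family}" source address="{address}" service name="ssh" accept'


if __name__ == "__main__":
    print(ssh_rich_rule("192.168.1.5"))
```

The returned string is what goes inside the single quotes of --add-rich-rule.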

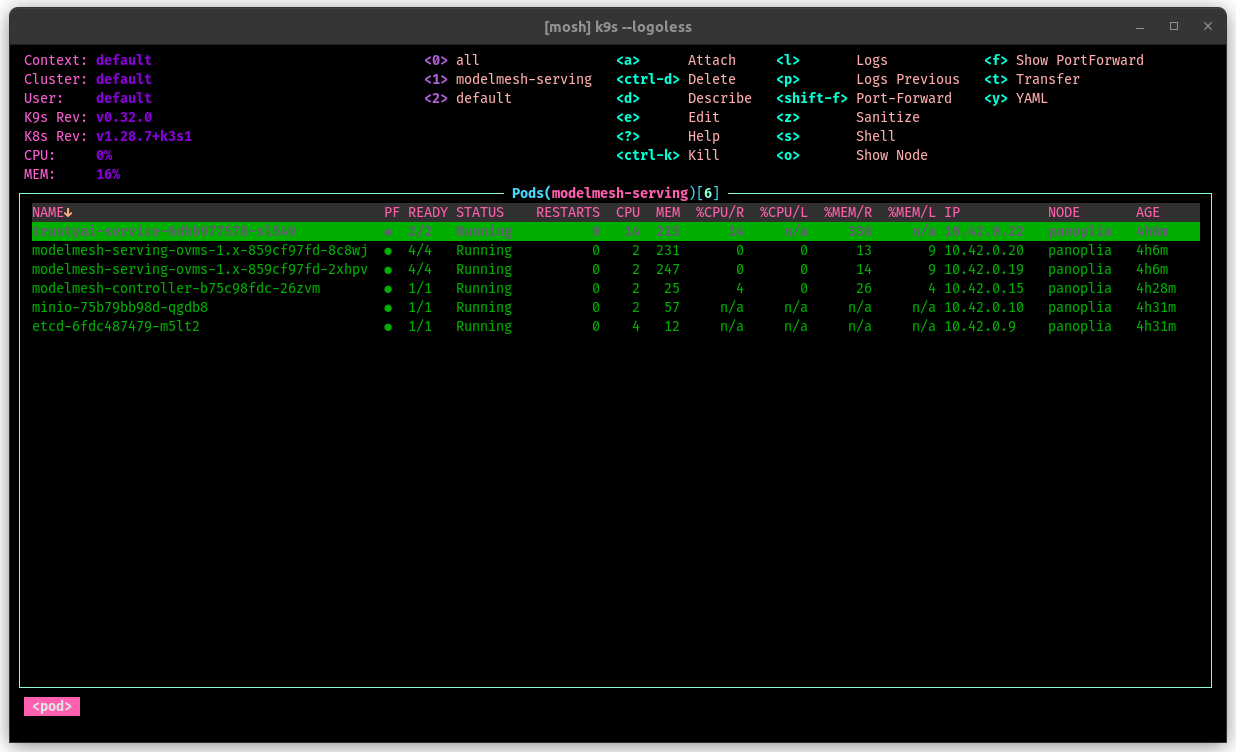

k9s vaporwave skin

March, 3rd - I’ve made a k9s vaporwave skin. You can find it here.

Hint: pairs well with the Emacs vaporwave icon

Kubernetes leases

February, 23rd - If you’re developing or managing a Kubernetes operator, you might encounter an error similar to this:

E0223 11:11:46.086693 1 leaderelection.go:330] error retrieving resource lock system/b7e9931f.trustyai.opendatahub.io: leases.coordination.k8s.io "b7e9931f.trustyai.opendatahub.io" is forbidden: User "system:serviceaccount:system:trustyai-service-operator-controller-manager" cannot get resource "leases" in API group "coordination.k8s.io" in the namespace "system".

This error indicates that the operator’s service account lacks the necessary permissions to access the leases resource in the coordination.k8s.io API group, necessary for tasks like leader election. Here’s how to fix this issue by granting the appropriate permissions.

Adding Permissions in the Controller

To address this, you can use Kubebuilder annotations to ensure your controller has the necessary RBAC permissions. Add the following line to your controller’s code:

//+kubebuilder:rbac:groups=coordination.k8s.io,resources=leases,verbs=get;list;watch;create;update;patch;delete

This annotation tells Kubebuilder to generate the required RBAC permissions for managing leases. After adding it, regenerate your RBAC configuration and apply it to your cluster.

Alternatively, Manually Update Roles

If you’re not using Kubebuilder or prefer a manual approach, you can directly edit your RBAC roles. Create or update a ClusterRole with the necessary permissions:

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: lease-access
rules:
- apiGroups: ["coordination.k8s.io"]
  resources: ["leases"]
  verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]

Then, bind this ClusterRole to your service account using a ClusterRoleBinding.

Understanding Leases and Coordination

The leases resource in the coordination.k8s.io API group is important for implementing leader election in Kubernetes. Leader election ensures that only one instance of your operator is active at a time, which prevents conflicts. You can read more on leases here.
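To make the idea concrete, here is a toy in-memory model of lease-based leader election. It is a sketch of the concept only: it does not talk to the Kubernetes API, and the Lease class and its fields are simplified stand-ins that I named for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Lease:
    """Toy lease: one holder at a time, valid for duration_seconds after each renewal."""
    holder: Optional[str] = None
    renew_time: float = 0.0
    duration_seconds: float = 15.0

    def try_acquire(self, candidate: str, now: float) -> bool:
        """Acquire or renew the lease; return True if candidate is the leader afterwards."""
        expired = now - self.renew_time >= self.duration_seconds
        if self.holder is None or self.holder == candidate or expired:
            self.holder = candidate
            self.renew_time = now
            return True
        # Someone else holds a lease that has not expired yet.
        return False


if __name__ == "__main__":
    lease = Lease()
    print(lease.try_acquire("replica-a", now=0.0))   # first candidate wins
    print(lease.try_acquire("replica-b", now=5.0))   # lease still held by replica-a
    print(lease.try_acquire("replica-b", now=30.0))  # replica-a stopped renewing
```

In a real cluster the lease lives in the API server, which is why the operator’s service account needs permissions to get, create and update it.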

KServe Deployment: Increase inotify Watches

Deploying KServe on Kubernetes can run into a “too many open files” error, especially when using KinD. This error is due to a low limit of inotify watches, which are needed to track file changes.

KinD users often face this issue, detailed in their known issues page. The solution is to increase the inotify watches limit:

sudo sysctl fs.inotify.max_user_watches=524288

This command raises the watch limit until the next reboot, facilitating a smoother KServe deployment. For a permanent fix, add the setting fs.inotify.max_user_watches=524288 to /etc/sysctl.conf or to a file under /etc/sysctl.d/.

By adjusting this setting, you should avoid common file monitoring errors and enjoy a seamless KServe experience on KinD.
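To see whether the change is needed at all, you can read the current limit from /proc. A small sketch, assuming a Linux host; the helper names are mine.

```python
from pathlib import Path

# Value used in the sysctl command above.
RECOMMENDED_WATCHES = 524288


def current_limit(path: str = "/proc/sys/fs/inotify/max_user_watches") -> int:
    """Read the inotify watch limit from the given procfs file."""
    return int(Path(path).read_text().strip())


def needs_raise(limit: int, recommended: int = RECOMMENDED_WATCHES) -> bool:
    """Return True if the limit is below the recommended value."""
    return limit < recommended


if __name__ == "__main__":
    limit = current_limit()
    print(f"max_user_watches = {limit}, raise it: {needs_raise(limit)}")
```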

Plan 9 design

January, 28th - An interesting quote from Ron Minnich about Plan 9’s design:

On Plan 9, the rule tends to be: if feature(X) can’t be implemented in a way that works for everything, don’t do it. No special cases allowed;

BSD mascot

January, 21st - There is an interesting post by Jacob Elder where I learned that John Lasseter (of Toy Story, A Bug’s Life, Cars and Cars 2) also created the BSD mascot.

2023

KServe logging

September, 27th - In Kubernetes, labels serve as a mechanism to organize and select groupings of objects. They are key-value pairs associated with objects and can be used to apply operations on a subset of objects. Kustomize is a tool that allows us to manage these configurations efficiently.

Let’s consider a project named foo. The project has various Kubernetes objects labeled with app: foo. Now, suppose there is a requirement to change the label to app: bar. This is where Kustomize’s commonLabels feature comes in handy.

Project Name: foo

Existing Label: app: foo

New Label: app: bar

Assume we have a kustomization.yaml file referencing the resources of project foo:

apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
- deployment.yaml
- service.yaml

To change the label from app: foo to app: bar, we can use the commonLabels field in the kustomization.yaml file:

apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
- deployment.yaml
- service.yaml
commonLabels:
  app: bar

When you run kustomize build, it will add or override the existing app label with app: bar on every resource and selector specified in the kustomization.yaml file. This ensures that all the resources are uniformly labeled with app: bar, making them identifiable and manageable under the new label.
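To illustrate the “every resource and selector” part, here is a simplified Python model of the transformation applied to a resource that has been parsed into a dict. It is a sketch that only handles Deployment and Service, not Kustomize’s actual implementation, and apply_common_labels is a hypothetical helper.

```python
import copy


def apply_common_labels(resource: dict, labels: dict) -> dict:
    """Return a copy of resource with labels added to metadata and selectors."""
    out = copy.deepcopy(resource)
    out.setdefault("metadata", {}).setdefault("labels", {}).update(labels)
    kind = out.get("kind")
    if kind == "Service":
        # A Service selects pods through spec.selector.
        out.setdefault("spec", {}).setdefault("selector", {}).update(labels)
    elif kind == "Deployment":
        spec = out.setdefault("spec", {})
        # The selector and the pod template labels must agree.
        spec.setdefault("selector", {}).setdefault("matchLabels", {}).update(labels)
        template_meta = spec.setdefault("template", {}).setdefault("metadata", {})
        template_meta.setdefault("labels", {}).update(labels)
    return out


if __name__ == "__main__":
    deployment = {
        "kind": "Deployment",
        "metadata": {"name": "foo", "labels": {"app": "foo"}},
        "spec": {
            "selector": {"matchLabels": {"app": "foo"}},
            "template": {"metadata": {"labels": {"app": "foo"}}},
        },
    }
    print(apply_common_labels(deployment, {"app": "bar"}))
```

Because the selector changes too, keep in mind that a Deployment’s selector is immutable once created, so relabelling a live Deployment this way usually means recreating it.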

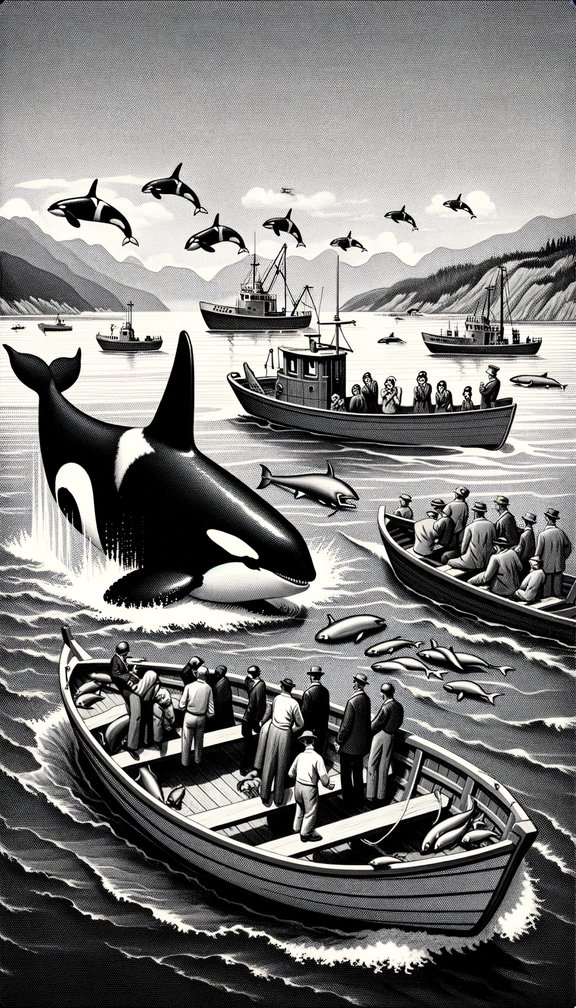

Orca's fashion

September, 18th - The waters around the Iberian Peninsula (mostly reported off the Spanish and Portuguese coasts) have been of interest for marine biologists due to a notable increase in interactions between orcas and boats. Having intricate social structures and intelligence, orcas display behaviours that may be influenced by a multitude of factors such as environmental and social ones.

Scientists postulate that these interactions might be representative of a transient “fad” within orca pods. For perspective, in 1987, there was an observable trend among the southern resident orcas of Puget Sound, who took to carrying dead salmon on their heads. This behaviour, while seemingly anomalous at the time, did not persist.

Modernism and Gazette

September, 16th - Recently, I’ve been reading a fair bit about John Dos Passos, and while I still haven’t read any of his books, his approach to literature seems quite avant-garde for his time. The “U.S.A.” trilogy is frequently cited as a significant exploration of American life in the early 20th century, using techniques like the “Newsreel” and “Camera Eye” to tell its stories. From the discussions and summaries I’ve encountered, Dos Passos seemed to have a knack for interweaving fiction with the pressing sociopolitical issues of his era. It’s intriguing to think about the depth of his works without having directly experienced them.

Gazette and scroll

I was considering moving the entirety of the blog (not the site, just these pages) to scroll.

Unfortunately, scroll is not a great fit for the rest of the site’s workflow.

At the moment, it’s a bit of a compromise. I’m using the Gazette style for the blog page, but it’s just the CSS. The underlying process of building the site is the same (still using Markdown).

A thought on !important

In CSS, !important is really not a good idea. It makes debugging very time-consuming and fundamentally breaks the Cascading part of CSS.

Reducing software bugs

I just read Daniel J. Bernstein’s paper “Some thoughts on security after ten years of qmail 1.0”, which discusses how to reduce software bugs. I found these to be the main takeaways:

Code Reviews Matter: By thoroughly reviewing your code, you can get a sense of its vulnerability to bugs. This isn’t just about finding immediate errors, but evaluating the overall quality of your software-development approach. Adjust your strategies based on these reviews for better outcomes.

Measuring Bugs: There are two primary ways to gauge the ‘bugginess’ of software—either by looking at the bugs per specific task or bugs per line of code. Each approach has its merits. While the former can give insights into the efficiency of a task, the latter can be an indicator of how long debugging might take.

Eliminate Risky Habits: In coding, as in life, some habits are more prone to mistakes than others. Identifying these risky behaviours and addressing them head-on can dramatically cut down on errors. This might even mean reshaping the coding environment or tools used.

The Virtue of Brevity: A larger codebase naturally has more room for errors. If two processes achieve the same result, but one does it with fewer lines of code, the latter is generally preferable. It’s not about cutting corners but about efficiency and clarity.

Access Control: Just as you wouldn’t give every employee a key to the company safe, not all code should have unrestricted access. By architecting systems that limit code permissions, you add another layer of protection against potential issues.

In conclusion, a proactive, thoughtful approach to coding, paired with smart strategies and tools, can significantly reduce software bugs.

Issue bankruptcy

Today Lodash declared “issue bankruptcy” and closed 363 issues and 325 PRs to start afresh.

KNative missing CRDs

September, 7th - If you’re using Kubernetes 1.27.3 to install KServe 0.9 or 0.10, you might encounter this error:

resource mapping not found for name: "activator" namespace: "knative-serving"

from "https://github.com/knative/serving/releases/download/knative-v1.7.0/serving-core.yaml":

no matches for kind "HorizontalPodAutoscaler" in version "autoscaling/v2beta2"

ensure CRDs are installed first

resource mapping not found for name: "webhook" namespace: "knative-serving"

from "https://github.com/knative/serving/releases/download/knative-v1.7.0/serving-core.yaml":

no matches for kind "HorizontalPodAutoscaler" in version "autoscaling/v2beta2"

Here’s the main issue:

Kubernetes 1.27.3 no longer supports the autoscaling/v2beta2 API version of HorizontalPodAutoscaler. The Kubernetes release notes state:

The autoscaling/v2beta2 API version of HorizontalPodAutoscaler is no longer served as of v1.26.

Meanwhile, KNative’s release notes indicate that its v1.7 version is validated for Kubernetes v1.24 and v1.25 only, which leads to this compatibility problem.

Possible solutions:

- Downgrade: Use Kubernetes v1.25 (for example, through Minikube’s --kubernetes-version flag) to ensure KNative works without changes.

- Edit the Manifests: Adjust the manifests to resolve the version discrepancy.

- Wait for an Update: A future KNative release will likely fix this compatibility issue.

Note that if you take the downgrade route, you might see the following warning in your logs:

Warning: autoscaling/v2beta2 HorizontalPodAutoscaler is deprecated in v1.23+, unavailable in v1.26+;

use autoscaling/v2 HorizontalPodAutoscaler
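For the “edit the manifests” route, the warning itself names the replacement API version. Below is a rough sketch that rewrites only the apiVersion lines of a manifest held in a string. It assumes the HorizontalPodAutoscaler fields used in the manifest are accepted unchanged by autoscaling/v2, which I have not verified against the KNative manifests. The bump_hpa_api_version helper is hypothetical.

```python
import re

# Match whole "apiVersion: autoscaling/v2beta2" lines only, keeping their indentation.
_OLD_API = re.compile(r"^([ \t]*apiVersion:[ \t]*)autoscaling/v2beta2[ \t]*$", re.MULTILINE)


def bump_hpa_api_version(manifest: str) -> str:
    """Replace autoscaling/v2beta2 with autoscaling/v2 on apiVersion lines."""
    return _OLD_API.sub(r"\1autoscaling/v2", manifest)


if __name__ == "__main__":
    sample = (
        "apiVersion: autoscaling/v2beta2\n"
        "kind: HorizontalPodAutoscaler\n"
        "metadata:\n"
        "  name: activator\n"
    )
    print(bump_hpa_api_version(sample))
```

Comments or other text that merely mention the old version are left alone, since only complete apiVersion lines match.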

JUnit null and empty sources

September, 5th - Today I learned about JUnit’s ability to use null and empty sources in conjunction with @ParameterizedTest. Example:

@ParameterizedTest
@NullAndEmptySource
@ValueSource(strings = {"test", "JUnit"})
void nullAndEmptyTest(String text) {
    Assertions.assertTrue(text == null || text.trim().isEmpty() || "test".equals(text) || "JUnit".equals(text));
}

There’s also @NullSource and @EmptySource.

LunarVim

September, 4th - I’ve been trying out Neovim in the form of LunarVim.

To use it with a GUI on macOS, I use Neovide, with the command

neovide --neovim-bin ~/.local/bin/lvim --multigrid --maximized --frame none

If you are planning on using Neovim I recommend using a tree-sitter-aware theme. You can find a list here.

My personal favourites are modus and gruvbox-material.

Hatch Poetry

September, 3rd - I’ve been a long-time user of Poetry for my Python projects, and it’s been a welcome change. However, my recent exploration into Hatch has sparked my interest.

Poetry simplifies dependency management with its unified pyproject.toml, but Hatch excels in scenarios requiring complex workflows.

A common personal use-case is Docker multi-stage builds. Hatch, with its conventional requirements.txt and setup.py, offers more granular control, making complex configurations easier.

Hatch also aligns closely with the existing Python ecosystem due to its use of traditional setup files, linking old with new workflows, ensuring a better integration.

For instance, consider a container image manifest such as:

# Use a base Python image
FROM python:3.9-slim AS base

# Set up a working directory
WORKDIR /app

# Copy requirements and install dependencies
COPY requirements.txt .
RUN pip install -r requirements.txt

# Copy the rest of the application
COPY . .

# Other Docker configurations...

With Poetry, you might need to install it within the Docker image and use poetry export to generate a requirements.txt equivalent. With Hatch, since it supports the traditional requirements.txt, integration with multi-stage builds can be simpler.

Exception Bubbling in Python

September, 2nd - One aspect of Java that occasionally nudges at me is its explicit approach to exception handling.

Java requires developers to either handle exceptions via try-catch blocks or declare them in method signatures. While it does enforce robustness, it sometimes feels a bit too constrained, especially when compared to the flexible nature of Python.

Recently, I crafted a solution in Python for k8sutils. Instead of the usual explicit exception handling or modifying method signatures, I created a Python decorator - akin to annotations in Java - that substitutes an exception for another without altering the underlying code. Here’s what it looks like:

import functools
import subprocess


def rethrow(exception_type=Exception):
    """Rethrow a CalledProcessError as the specified exception type."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            try:
                return func(*args, **kwargs)
            except subprocess.CalledProcessError as e:
                raise exception_type(f"Command failed: {e.cmd}. Error: {e.output}") from e
        return wrapper
    return decorator

Using this decorator, it becomes straightforward to alter the exception being thrown:

import json


@rethrow(ValueError)
def get(namespace=None):
    """Get all deployments in the cluster."""
    cmd = "kubectl get deployments -o json"
    if namespace:
        cmd += f" -n {namespace}"
    result = subprocess.run(cmd, shell=True, check=True, capture_output=True, text=True)
    deployments = json.loads(result.stdout)
    return deployments

The @rethrow(ValueError) decorator automatically translates a CalledProcessError to a ValueError without the need to change the method’s code.

For another example:

@rethrow(RuntimeError)
def delete_deployment(deployment_name):
    """Delete a specific deployment."""
    cmd = f"kubectl delete deployment {deployment_name}"
    subprocess.run(cmd, shell=True, check=True)

Here, instead of bubbling up the generic CalledProcessError, any error encountered will raise a RuntimeError.
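To see the translation from the caller’s side without needing a cluster, here is a self-contained sketch. It repeats the decorator and swaps kubectl for a child Python process that exits with a non-zero status. The always_fails and demo functions are hypothetical stand-ins.

```python
import functools
import subprocess
import sys


def rethrow(exception_type=Exception):
    """Rethrow a CalledProcessError as the specified exception type."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            try:
                return func(*args, **kwargs)
            except subprocess.CalledProcessError as e:
                raise exception_type(f"Command failed: {e.cmd}. Error: {e.output}") from e
        return wrapper
    return decorator


@rethrow(RuntimeError)
def always_fails():
    """Stand-in for a kubectl call: run a child process that exits with status 3."""
    subprocess.run([sys.executable, "-c", "import sys; sys.exit(3)"], check=True)


def demo() -> str:
    """Call the decorated function and describe the exception the caller sees."""
    try:
        always_fails()
    except RuntimeError as err:
        cause = err.__cause__  # original CalledProcessError, kept by "raise ... from e"
        return f"{type(err).__name__} caused by {type(cause).__name__} (exit {cause.returncode})"
    return "no error"


if __name__ == "__main__":
    print(demo())
```

Because the decorator uses raise ... from e, the original CalledProcessError stays available as __cause__, so nothing is lost for debugging.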