Bayesian estimation of changepoints

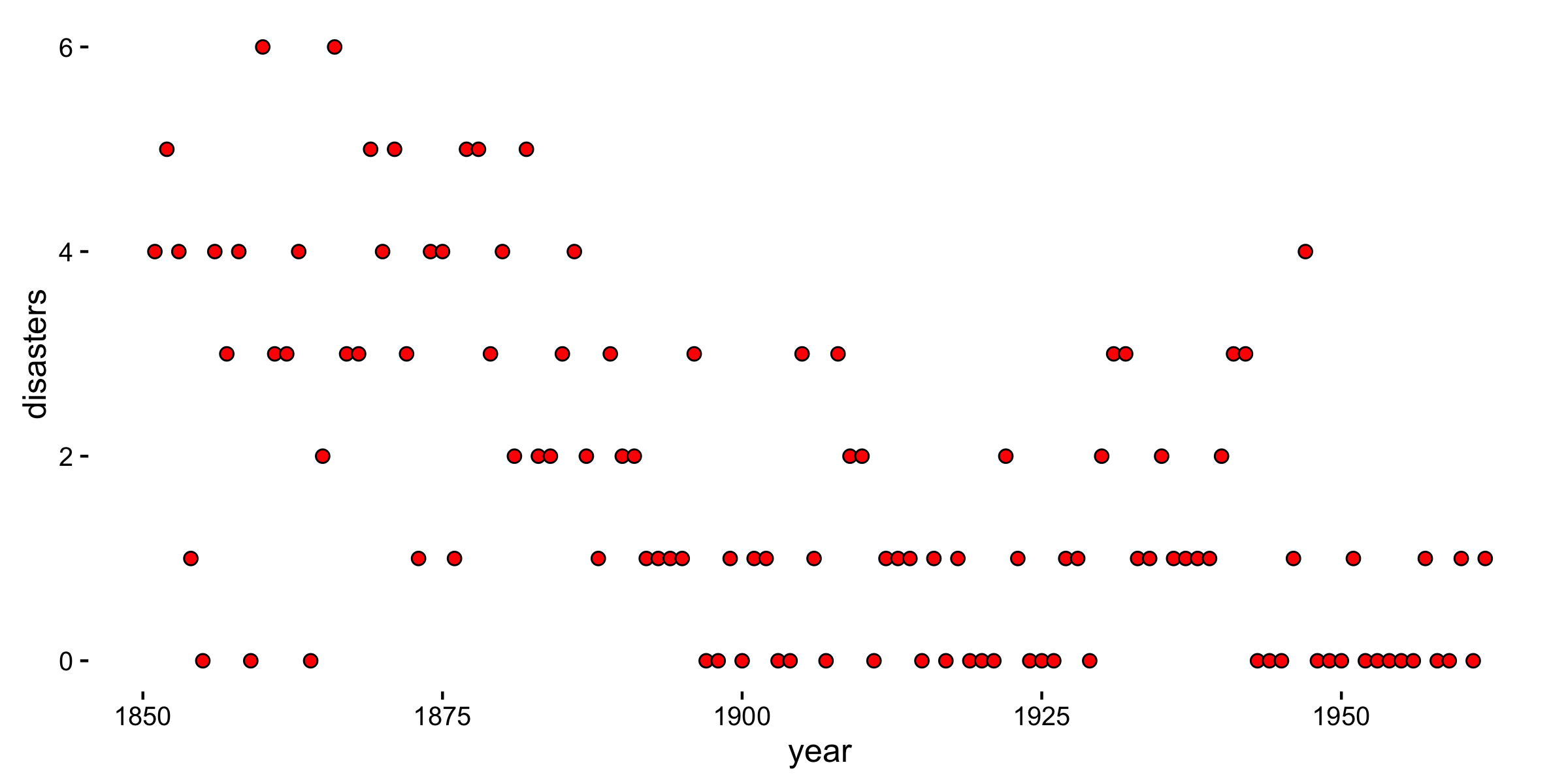

A common introductory problem in Bayesian changepoint detection is the record of UK coal mining disasters from 1851 to 1962. More information can be found in Carlin, Gelfand and Smith (1992).

As we can see from the plot below, the number of yearly disasters ranges from 0 to 6 and we will assume that at some point within this time range a change in the accident rate has occured.

The number of yearly disasters can be modelled as a Poisson with a unknown rate depending on the changepoint

Our objective is to estimate in which year the change occurs (the changepoint

We will use Crystal (with crystal-gsl) to perform the estimation.

We start by placing independent priors on the parameters:

For the remainder we’ll set

The joint posterior of

where the likelihood is

As such, the full joint posterior can be written as:

It follows that the full conditionals are, for

We can define the

def mu_update(data : Array(Int), k : Int, b1 : Float64) : Float64

Gamma.sample(0.5 + data[0..k].sum, k + b1)

end

The full conditional for

which we implement as:

def lambda_update(data : Array(Int), k : Int, b2 : Float64) : Float64

Gamma.sample(0.5 + data[(k+1)..M].sum, M - k + b2)

end

The next step is to take

which we will implement as:

def b1_update(mu : Float64) : Float64

Gamma.sample(0.5, mu + 1.0)

end

def b2_update(lambda : Float64) : Float64

Gamma.sample(0.5, lambda + 1.0)

end

And finally we choose the next year,

where

implemented as

def l(data : Array(Int), k : Int, lambda : Float64, mu : Float64) : Float64

Math::E**((lambda - mu)*k) * (mu / lambda)**(data[0..k].sum)

end

So, let’s start by writing our initials conditions:

iterations = 100000

b1 = 1.0

b2 = 1.0

M = data.size # number of data points

# parameter storage

mus = Array(Float64).new(iterations, 0.0)

lambdas = Array(Float64).new(iterations, 0.0)

ks = Array(Int32).new(iterations, 0)

We can then cast the priors:

mus[0] = Gamma.sample(0.5, b1)

lambdas[0] = Gamma.sample(0.5, b2)

ks[0] = Random.new.rand(M)

And define the main body of our Gibbs sampler:

(1...iterations).map { |i|

k = ks[i-1]

mus[i] = mu_update(data, k, b1)

lambdas[i] = lambda_update(data, k, b2)

b1 = b1_update(mus[i])

b2 = b2_update(lambdas[i])

ks[i] = Multinomial.sample((0...M).map { |kk|

l(data, kk, lambdas[i], mus[i])

})

}

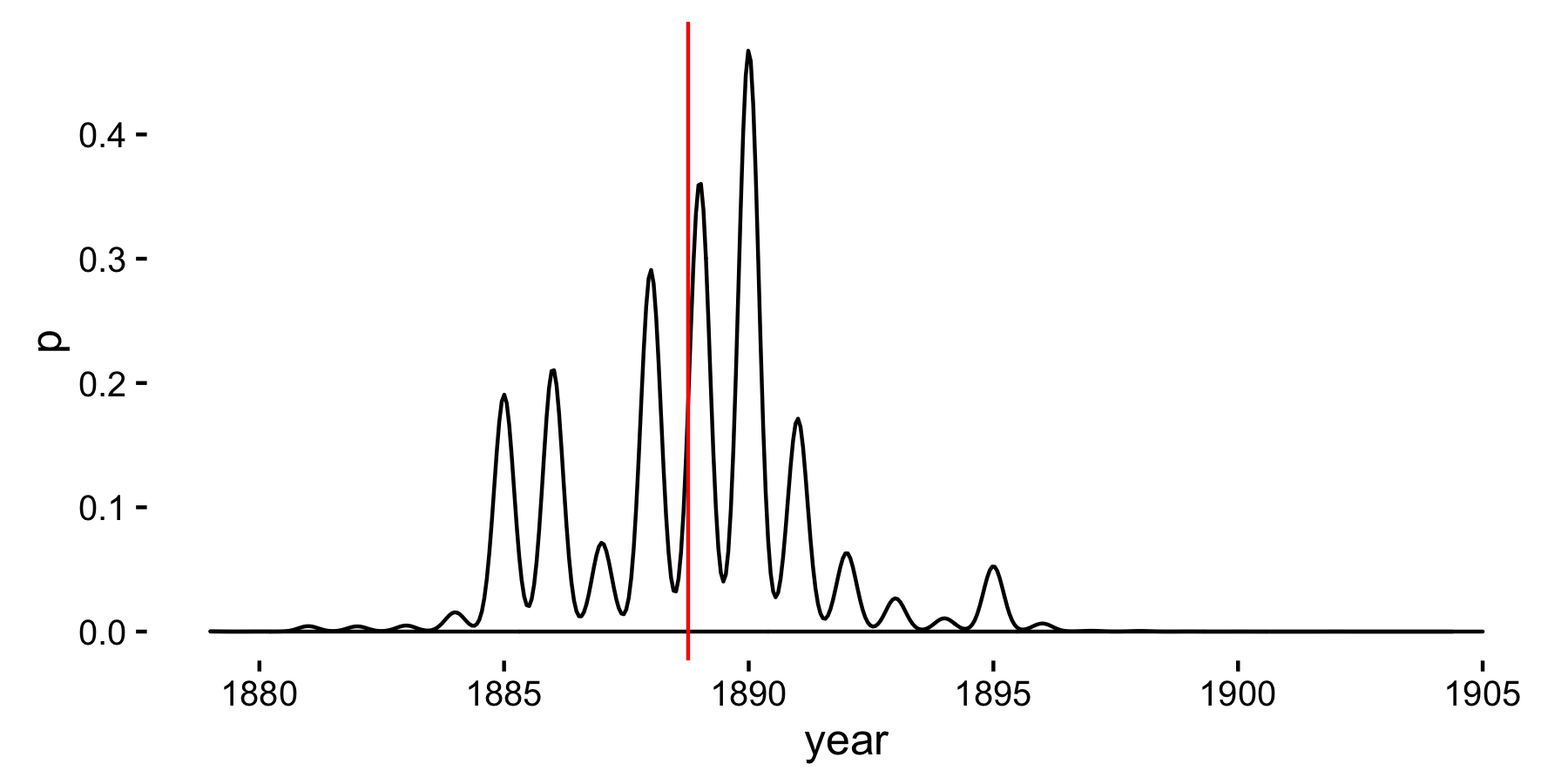

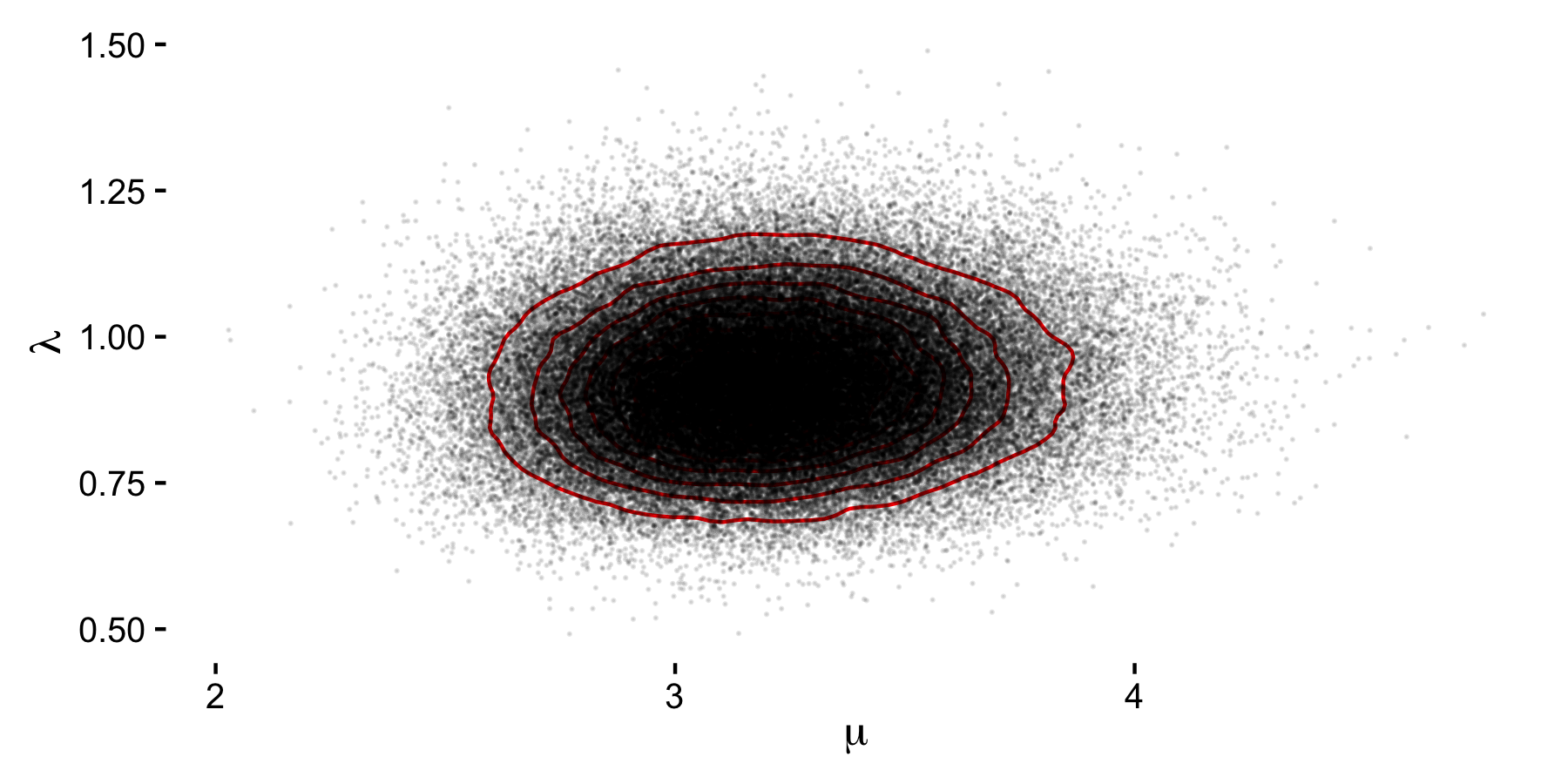

Looking at the results, we see that the mean value of

to indicate that the change in accident rates occurred somewhere near

We can visually check this by looking at the graph below. Also plotted are the density for the accident rates before (

Of course, one the main advantages of implementing the solution in Crystal is not only the boilerplate-free code, but the execution speed.

Compared to an equivalent implementation in ~R~ the Crystal code executed roughly 17 times faster.

| Language | Time (s) |

|---|---|

| R | 58.678 |

| Crystal | 3.587 |